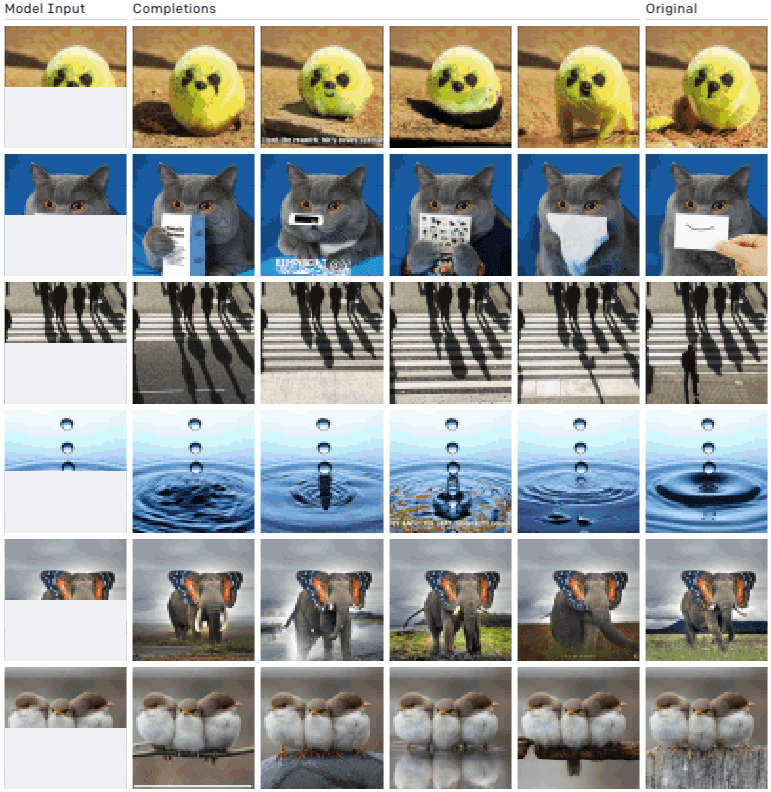

At its core, GPT-2 is a powerful prediction engine. It learned the structure of the English language by studying billions of examples of words, sentences, and paragraphs scraped from across the internet. Using that structure, it can generate new sentences by statistically predicting the order in which words should appear. Researchers at OpenAI therefore swapped the words for pixels and trained the same algorithm on images from ImageNet, the most popular image bank for deep learning. Because the algorithm was designed to work with one-dimensional data (i.e., st…
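The idea of reusing a next-word predictor on pixels can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not OpenAI's code. It assumes each pixel has already been reduced to a small integer code, unrolls a 2-D image row by row (raster order) into a 1-D sequence, builds "context → next token" training pairs, and uses simple bigram counts as a deliberately simplified stand-in for GPT-2's Transformer. The function names `flatten_raster`, `next_token_pairs`, `fit_bigram`, and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical helpers for illustration only; not OpenAI's implementation.
# Assumption: pixels are already small integer codes (e.g., a reduced palette).


def flatten_raster(image: List[List[int]]) -> List[int]:
    """Unroll a 2-D grid of pixel codes row by row into a 1-D sequence."""
    return [pixel for row in image for pixel in row]


def next_token_pairs(seq: List[int]) -> List[Tuple[List[int], int]]:
    """Turn a sequence into (context, next token) training examples."""
    return [(seq[:i], seq[i]) for i in range(1, len(seq))]


def fit_bigram(seqs: List[List[int]]) -> Dict[int, Counter]:
    """Count how often each token follows each other token.

    A toy stand-in for GPT-2's Transformer: it only looks one token back.
    """
    counts: Dict[int, Counter] = defaultdict(Counter)
    for seq in seqs:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: Dict[int, Counter], prev: int) -> Optional[int]:
    """Return the most frequent follower of `prev`, or None if never seen."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    # Two tiny 2x3 "images" made of pixel codes.
    images = [
        [[0, 0, 1], [1, 1, 2]],
        [[0, 1, 1], [2, 2, 2]],
    ]
    seqs = [flatten_raster(img) for img in images]
    print(seqs[0])                        # [0, 0, 1, 1, 1, 2]
    print(next_token_pairs(seqs[0])[:2])  # [([0], 0), ([0, 0], 1)]
    counts = fit_bigram(seqs)
    print(predict_next(counts, 0))        # 1
    print(predict_next(counts, 2))        # 2
```

The counting model only looks one pixel back; a model like GPT-2 conditions on the whole preceding context, which is what lets it capture longer-range structure in a sequence.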

